|

Jacobi

|

|

Jacobi

|

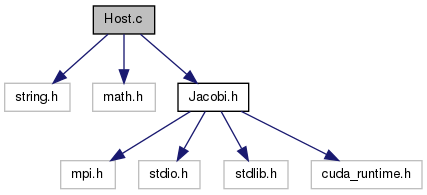

This contains the host functions for data allocations, message passing and host-side computations. More...

Functions | |

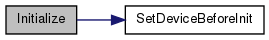

| void | Initialize (int *argc, char ***argv, int *rank, int *size) |

| Initialize the MPI environment, allowing the CUDA device to be selected before (if necessary) | |

| void | Finalize (real *devBlocks[2], real *devSideEdges[2], real *devHaloLines[2], real *hostSendLines[2], real *hostRecvLines[2], real *devResidue, cudaStream_t copyStream) |

| Close (finalize) the MPI environment and deallocate buffers. | |

| int | ApplyTopology (int *rank, int size, const int2 *topSize, int *neighbors, int2 *topIndex, MPI_Comm *cartComm) |

| Generates the 2D topology and establishes the neighbor relationships between MPI processes. | |

| void | InitializeDataChunk (int topSizeY, int topIdxY, const int2 *domSize, const int *neighbors, cudaStream_t *copyStream, real *devBlocks[2], real *devSideEdges[2], real *devHaloLines[2], real *hostSendLines[2], real *hostRecvLines[2], real **devResidue) |

| This allocates and initializes all the relevant data buffers before the Jacobi run. | |

| void | PreRunJacobi (MPI_Comm cartComm, int rank, int size, double *timerStart) |

| This function is called immediately before the main Jacobi loop. | |

| void | PostRunJacobi (MPI_Comm cartComm, int rank, int size, const int2 *topSize, const int2 *domSize, int iterations, int useFastSwap, double timerStart, double avgTransferTime) |

| This function is called immediately after the main Jacobi loop. | |

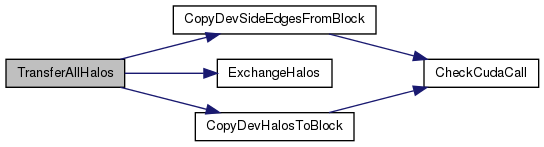

| double | TransferAllHalos (MPI_Comm cartComm, const int2 *domSize, const int2 *topIndex, const int *neighbors, cudaStream_t copyStream, real *devBlocks[2], real *devSideEdges[2], real *devHaloLines[2], real *hostSendLines[2], real *hostRecvLines[2]) |

| This performs the exchanging of all necessary halos between 2 neighboring MPI processes. | |

| void | RunJacobi (MPI_Comm cartComm, int rank, int size, const int2 *domSize, const int2 *topIndex, const int *neighbors, int useFastSwap, real *devBlocks[2], real *devSideEdges[2], real *devHaloLines[2], real *hostSendLines[2], real *hostRecvLines[2], real *devResidue, cudaStream_t copyStream, int *iterations, double *avgTransferTime) |

| This is the main Jacobi loop, which handles device computation and data exchange between MPI processes. | |

This contains the host functions for data allocations, message passing and host-side computations.

| int ApplyTopology | ( | int * | rank, |

| int | size, | ||

| const int2 * | topSize, | ||

| int * | neighbors, | ||

| int2 * | topIndex, | ||

| MPI_Comm * | cartComm | ||

| ) |

Generates the 2D topology and establishes the neighbor relationships between MPI processes.

| [in,out] | rank | The rank of the calling MPI process |

| [in] | size | The total number of MPI processes available |

| [in] | topSize | The desired topology size (this must match the number of available MPI processes) |

| [out] | neighbors | The list that will be populated with the direct neighbors of the calling MPI process |

| [out] | topIndex | The 2D index that the calling MPI process will have in the topology |

| [out] | cartComm | The carthesian MPI communicator |

| void Finalize | ( | real * | devBlocks[2], |

| real * | devSideEdges[2], | ||

| real * | devHaloLines[2], | ||

| real * | hostSendLines[2], | ||

| real * | hostRecvLines[2], | ||

| real * | devResidue, | ||

| cudaStream_t | copyStream | ||

| ) |

Close (finalize) the MPI environment and deallocate buffers.

| [in] | devBlocks | The 2 device blocks that were used during the Jacobi iterations |

| [in] | devSideEdges | The 2 device side edges that were used to hold updated halos before sending |

| [in] | devHaloLines | The 2 device lines that were used to hold received halos |

| [in] | hostSendLines | The 2 host send buffers that were used at halo exchange in the normal CUDA & MPI version |

| [in] | hostRecvLines | The 2 host receive buffers that were used at halo exchange in the normal CUDA & MPI version |

| [in] | devResidue | The global residue, kept in device memory |

| [in] | copyStream | The stream used to overlap top & bottom halo exchange with side halo copy to host memory |

| void Initialize | ( | int * | argc, |

| char *** | argv, | ||

| int * | rank, | ||

| int * | size | ||

| ) |

Initialize the MPI environment, allowing the CUDA device to be selected before (if necessary)

| [in,out] | argc | The number of command-line arguments |

| [in,out] | argv | The list of command-line arguments |

| [out] | rank | The global rank of the current MPI process |

| [out] | size | The total number of MPI processes available |

| void InitializeDataChunk | ( | int | topSizeY, |

| int | topIdxY, | ||

| const int2 * | domSize, | ||

| const int * | neighbors, | ||

| cudaStream_t * | copyStream, | ||

| real * | devBlocks[2], | ||

| real * | devSideEdges[2], | ||

| real * | devHaloLines[2], | ||

| real * | hostSendLines[2], | ||

| real * | hostRecvLines[2], | ||

| real ** | devResidue | ||

| ) |

This allocates and initializes all the relevant data buffers before the Jacobi run.

| [in] | topSizeY | The size of the topology in the Y direction |

| [in] | topIdxY | The Y index of the calling MPI process in the topology |

| [in] | domSize | The size of the local domain (for which only the current MPI process is responsible) |

| [in] | neighbors | The neighbor ranks, according to the topology |

| [in] | copyStream | The stream used to overlap top & bottom halo exchange with side halo copy to host memory |

| [out] | devBlocks | The 2 device blocks that will be updated during the Jacobi run |

| [out] | devSideEdges | The 2 side edges (parallel to the Y direction) that will hold the packed halo values before sending them |

| [out] | devHaloLines | The 2 halo lines (parallel to the Y direction) that will hold the packed halo values after receiving them |

| [out] | hostSendLines | The 2 host send buffers that will be used during the halo exchange by the normal CUDA & MPI version |

| [out] | hostRecvLines | The 2 host receive buffers that will be used during the halo exchange by the normal CUDA & MPI version |

| [out] | devResidue | The global device residue, which will be updated after every Jacobi iteration |

| void PostRunJacobi | ( | MPI_Comm | cartComm, |

| int | rank, | ||

| int | size, | ||

| const int2 * | topSize, | ||

| const int2 * | domSize, | ||

| int | iterations, | ||

| int | useFastSwap, | ||

| double | timerStart, | ||

| double | avgTransferTime | ||

| ) |

This function is called immediately after the main Jacobi loop.

| [in] | cartComm | The carthesian communicator |

| [in] | rank | The rank of the calling MPI process |

| [in] | topSize | The size of the topology |

| [in] | domSize | The size of the local domain |

| [in] | iterations | The number of successfully completed Jacobi iterations |

| [in] | useFastSwap | The flag indicating if fast pointer swapping was used to exchange blocks |

| [in] | timerStart | The Jacobi loop starting moment (measured as wall-time) |

| [in] | avgTransferTime | The average time spent performing MPI transfers (per process) |

| void PreRunJacobi | ( | MPI_Comm | cartComm, |

| int | rank, | ||

| int | size, | ||

| double * | timerStart | ||

| ) |

This function is called immediately before the main Jacobi loop.

| [in] | cartComm | The carthesian communicator |

| [in] | rank | The rank of the calling MPI process |

| [in] | size | The total number of MPI processes available |

| [out] | timerStart | The Jacobi loop starting moment (measured as wall-time) |

| void RunJacobi | ( | MPI_Comm | cartComm, |

| int | rank, | ||

| int | size, | ||

| const int2 * | domSize, | ||

| const int2 * | topIndex, | ||

| const int * | neighbors, | ||

| int | useFastSwap, | ||

| real * | devBlocks[2], | ||

| real * | devSideEdges[2], | ||

| real * | devHaloLines[2], | ||

| real * | hostSendLines[2], | ||

| real * | hostRecvLines[2], | ||

| real * | devResidue, | ||

| cudaStream_t | copyStream, | ||

| int * | iterations, | ||

| double * | avgTransferTime | ||

| ) |

This is the main Jacobi loop, which handles device computation and data exchange between MPI processes.

| [in] | cartComm | The carthesian MPI communicator |

| [in] | rank | The rank of the calling MPI process |

| [in] | size | The number of available MPI processes |

| [in] | domSize | The 2D size of the local domain |

| [in] | topIndex | The 2D index of the calling MPI process in the topology |

| [in] | neighbors | The list of ranks which are direct neighbors to the caller |

| [in] | useFastSwap | This flag indicates if blocks should be swapped through pointer copy (faster) or through element-by-element copy (slower) |

| [in,out] | devBlocks | The 2 device blocks that are updated during the Jacobi run |

| [in,out] | devSideEdges | The 2 side edges (parallel to the Y direction) that hold the packed halo values before sending them |

| [in,out] | devHaloLines | The 2 halo lines (parallel to the Y direction) that hold the packed halo values after receiving them |

| [in,out] | hostSendLines | The 2 host send buffers that are used during the halo exchange by the normal CUDA & MPI version |

| [in,out] | hostRecvLines | The 2 host receive buffers that are used during the halo exchange by the normal CUDA & MPI version |

| [in,out] | devResidue | The global device residue, which gets updated after every Jacobi iteration |

| [in] | copyStream | The stream used to overlap top & bottom halo exchange with side halo copy to host memory |

| [out] | iterations | The number of successfully completed iterations |

| [out] | avgTransferTime | The average time spent performing MPI transfers (per process) |

| double TransferAllHalos | ( | MPI_Comm | cartComm, |

| const int2 * | domSize, | ||

| const int2 * | topIndex, | ||

| const int * | neighbors, | ||

| cudaStream_t | copyStream, | ||

| real * | devBlocks[2], | ||

| real * | devSideEdges[2], | ||

| real * | devHaloLines[2], | ||

| real * | hostSendLines[2], | ||

| real * | hostRecvLines[2] | ||

| ) |

This performs the exchanging of all necessary halos between 2 neighboring MPI processes.

| [in] | cartComm | The carthesian MPI communicator |

| [in] | domSize | The 2D size of the local domain |

| [in] | topIndex | The 2D index of the calling MPI process in the topology |

| [in] | neighbors | The list of ranks which are direct neighbors to the caller |

| [in] | copyStream | The stream used to overlap top & bottom halo exchange with side halo copy to host memory |

| [in,out] | devBlocks | The 2 device blocks that are updated during the Jacobi run |

| [in,out] | devSideEdges | The 2 side edges (parallel to the Y direction) that hold the packed halo values before sending them |

| [in,out] | devHaloLines | The 2 halo lines (parallel to the Y direction) that hold the packed halo values after receiving them |

| [in,out] | hostSendLines | The 2 host send buffers that are used during the halo exchange by the normal CUDA & MPI version |

| [in,out] | hostRecvLines | The 2 host receive buffers that are used during the halo exchange by the normal CUDA & MPI version |

1.7.6.1

1.7.6.1