|

Jacobi

|

|

Jacobi

|

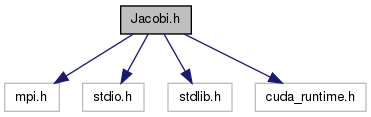

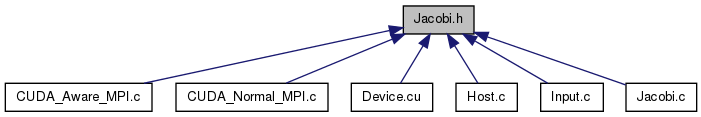

The header containing the most relevant functions for the Jacobi solver. More...

#include <mpi.h>#include <stdio.h>#include <stdlib.h>#include <cuda_runtime.h>

Defines | |

| #define | USE_FLOAT 0 |

| #define | DEFAULT_DOMAIN_SIZE 4096 |

| #define | MIN_DOM_SIZE 3 |

| #define | ENV_LOCAL_RANK "MV2_COMM_WORLD_LOCAL_RANK" |

| #define | MPI_MASTER_RANK 0 |

| #define | JACOBI_TOLERANCE 1.0E-5F |

| #define | JACOBI_MAX_LOOPS 1000 |

| #define | STATUS_OK 0 |

| #define | STATUS_ERR -1 |

Functions | |

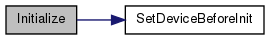

| void | Initialize (int *argc, char ***argv, int *rank, int *size) |

| Initialize the MPI environment, allowing the CUDA device to be selected before (if necessary) | |

| void | Finalize (real *devBlocks[2], real *devSideEdges[2], real *devHaloLines[2], real *hostSendLines[2], real *hostRecvLines[2], real *devResidue, cudaStream_t copyStream) |

| Close (finalize) the MPI environment and deallocate buffers. | |

| int | ParseCommandLineArguments (int argc, char **argv, int rank, int size, int2 *domSize, int2 *topSize, int *useFastSwap) |

| Parses the application's command-line arguments. | |

| int | ApplyTopology (int *rank, int size, const int2 *topSize, int *neighbors, int2 *topIndex, MPI_Comm *cartComm) |

| Generates the 2D topology and establishes the neighbor relationships between MPI processes. | |

| void | InitializeDataChunk (int topSizeY, int topIdxY, const int2 *domSize, const int *neighbors, cudaStream_t *copyStream, real *devBlocks[2], real *devSideEdges[2], real *devHaloLines[2], real *hostSendLines[2], real *hostRecvLines[2], real **devResidue) |

| This allocates and initializes all the relevant data buffers before the Jacobi run. | |

| void | PreRunJacobi (MPI_Comm cartComm, int rank, int size, double *timerStart) |

| This function is called immediately before the main Jacobi loop. | |

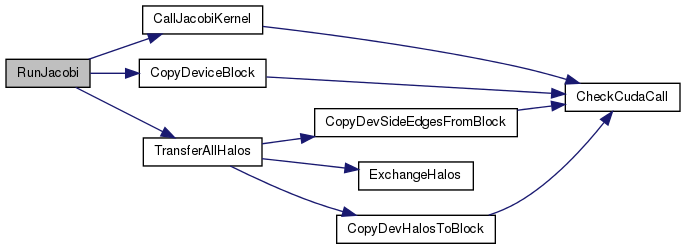

| void | RunJacobi (MPI_Comm cartComm, int rank, int size, const int2 *domSize, const int2 *topIndex, const int *neighbors, int useFastSwap, real *devBlocks[2], real *devSideEdges[2], real *devHaloLines[2], real *hostSendLines[2], real *hostRecvLines[2], real *devResidue, cudaStream_t copyStream, int *iterations, double *avgTransferTime) |

| This is the main Jacobi loop, which handles device computation and data exchange between MPI processes. | |

| void | PostRunJacobi (MPI_Comm cartComm, int rank, int size, const int2 *topSize, const int2 *domSize, int iterations, int useFastSwap, double timerStart, double avgTransferTime) |

| This function is called immediately after the main Jacobi loop. | |

| void | SetDeviceBeforeInit () |

| This allows the MPI process to set the CUDA device before the MPI environment is initialized For the CUDA-aware MPI version, the is the only place where the device gets set. In order to do this, we rely on the node's local rank, as the MPI environment has not been initialized yet. | |

| void | SetDeviceAfterInit (int rank) |

| This allows the MPI process to set the CUDA device after the MPI environment is initialized For the CUDA-aware MPI version, there is nothing to be done here. | |

| void | ExchangeHalos (MPI_Comm cartComm, real *devSend, real *hostSend, real *hostRecv, real *devRecv, int neighbor, int elemCount) |

| Exchange halo values between 2 direct neighbors This is the main difference between the normal CUDA & MPI version and the CUDA-aware MPI version. In the former, the exchange first requires a copy from device to host memory, an MPI call using the host buffer and lastly, a copy of the received host buffer back to the device memory. In the latter, the host buffers are completely skipped, as the MPI environment uses the device buffers directly. | |

| void | CheckCudaCall (cudaError_t command, const char *commandName, const char *fileName, int line) |

| The host function for checking the result of a CUDA API call. | |

| real | CallJacobiKernel (real *devBlocks[2], real *devResidue, const int4 *bounds, const int2 *size) |

| The host wrapper for one Jacobi iteration. | |

| void | CopyDeviceBlock (real *devBlocks[2], const int4 *bounds, const int2 *size) |

| The host wrapper for copying the updated block over the old one, after a Jacobi iteration finishes. | |

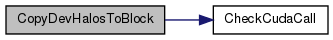

| void | CopyDevHalosToBlock (real *devBlock, const real *devHaloLineLeft, const real *devHaloLineRight, const int2 *size, const int *neighbors) |

| The host wrapper for copying (unpacking) the values from the halo buffers to the left and right side of the data block. | |

| void | CopyDevSideEdgesFromBlock (const real *devBlock, real *devSideEdges[2], const int2 *size, const int *neighbors, cudaStream_t copyStream) |

| The host wrapper for copying (packing) the values on the left and right side of the data block to separate, contiguous buffers. | |

The header containing the most relevant functions for the Jacobi solver.

| #define DEFAULT_DOMAIN_SIZE 4096 |

This is the default domain size (when not explicitly stated with "-d" in the command-line arguments).

| #define ENV_LOCAL_RANK "MV2_COMM_WORLD_LOCAL_RANK" |

This is the environment variable which allows the reading of the local rank of the current MPI process before the MPI environment gets initialized with MPI_Init(). This is necessary when running the CUDA-aware MPI version of the Jacobi solver, which needs this information in order to be able to set the CUDA device for the MPI process before MPI environment initialization. If you are using MVAPICH2, set this constant to "MV2_COMM_WORLD_LOCAL_RANK"; for Open MPI, use "OMPI_COMM_WORLD_LOCAL_RANK".

| #define JACOBI_MAX_LOOPS 1000 |

This is the Jacobi iteration count limit. The Jacobi run will never cycle more than this, even if it has not converged when finishing the last allowed iteration.

| #define JACOBI_TOLERANCE 1.0E-5F |

This is the Jacobi tolerance threshold. The run is considered to have converged when the maximum residue falls below this value.

| #define MIN_DOM_SIZE 3 |

This is the minimum acceptable domain size in any of the 2 dimensions.

| #define MPI_MASTER_RANK 0 |

This is the global rank of the root (master) process; in this application, it is mostly relevant for printing purposes.

| #define STATUS_ERR -1 |

This is the status value that indicates an error.

| #define STATUS_OK 0 |

This is the status value that indicates a successful operation.

| #define USE_FLOAT 0 |

Setting this to 1 makes the application use only single-precision floating-point data. Set this to 0 in order to use double-precision floating-point data instead.

| int ApplyTopology | ( | int * | rank, |

| int | size, | ||

| const int2 * | topSize, | ||

| int * | neighbors, | ||

| int2 * | topIndex, | ||

| MPI_Comm * | cartComm | ||

| ) |

Generates the 2D topology and establishes the neighbor relationships between MPI processes.

| [in,out] | rank | The rank of the calling MPI process |

| [in] | size | The total number of MPI processes available |

| [in] | topSize | The desired topology size (this must match the number of available MPI processes) |

| [out] | neighbors | The list that will be populated with the direct neighbors of the calling MPI process |

| [out] | topIndex | The 2D index that the calling MPI process will have in the topology |

| [out] | cartComm | The carthesian MPI communicator |

| real CallJacobiKernel | ( | real * | devBlocks[2], |

| real * | devResidue, | ||

| const int4 * | bounds, | ||

| const int2 * | size | ||

| ) |

The host wrapper for one Jacobi iteration.

| [in,out] | devBlocks | The 2 blocks involved: the first is the current one, the second is the one to be updated |

| [out] | devResidue | The global residue that is to be updated through the Jacobi iteration |

| [in] | bounds | The bounds of the rectangular block region that holds only computable values |

| [in] | size | The 2D size of data blocks, excluding the edges which hold the halo values |

| void CheckCudaCall | ( | cudaError_t | command, |

| const char * | commandName, | ||

| const char * | fileName, | ||

| int | line | ||

| ) |

The host function for checking the result of a CUDA API call.

| [in] | command | The result of the previously-issued CUDA API call |

| [in] | commandName | The name of the issued API call |

| [in] | fileName | The name of the file where the API call occurred |

| [in] | line | The line in the file where the API call occurred |

| void CopyDevHalosToBlock | ( | real * | devBlock, |

| const real * | devHaloLineLeft, | ||

| const real * | devHaloLineRight, | ||

| const int2 * | size, | ||

| const int * | neighbors | ||

| ) |

The host wrapper for copying (unpacking) the values from the halo buffers to the left and right side of the data block.

| [out] | devBlock | The 2D device block that will contain the halo values after unpacking |

| [in] | devHaloLineLeft | The halo buffer for the left side of the data block |

| [in] | devHaloLineRight | The halo buffer for the right side of the data block |

| [in] | size | The 2D size of data block, excluding the edges which hold the halo values |

| [in] | neighbors | The ranks of the neighboring MPI processes |

| void CopyDeviceBlock | ( | real * | devBlocks[2], |

| const int4 * | bounds, | ||

| const int2 * | size | ||

| ) |

The host wrapper for copying the updated block over the old one, after a Jacobi iteration finishes.

| [in,out] | devBlocks | The 2 blocks involved: the first is the old one, the second is the updated one |

| [in] | bounds | The bounds of the rectangular updated region (holding only computable values) |

| [in] | size | The 2D size of data blocks, excluding the edges which hold the halo values |

| void CopyDevSideEdgesFromBlock | ( | const real * | devBlock, |

| real * | devSideEdges[2], | ||

| const int2 * | size, | ||

| const int * | neighbors, | ||

| cudaStream_t | copyStream | ||

| ) |

The host wrapper for copying (packing) the values on the left and right side of the data block to separate, contiguous buffers.

| [in] | devBlock | The 2D device block containing the updated values after a Jacobi run |

| [out] | devSideEdges | The buffers where the edge values will be packed in |

| [in] | size | The 2D size of data block, excluding the edges which hold the halo values for the next iteration |

| [in] | neighbors | The ranks of the neighboring MPI processes |

| [in] | copyStream | The stream on which this kernel will be executed |

| void ExchangeHalos | ( | MPI_Comm | cartComm, |

| real * | devSend, | ||

| real * | hostSend, | ||

| real * | hostRecv, | ||

| real * | devRecv, | ||

| int | neighbor, | ||

| int | elemCount | ||

| ) |

Exchange halo values between 2 direct neighbors This is the main difference between the normal CUDA & MPI version and the CUDA-aware MPI version. In the former, the exchange first requires a copy from device to host memory, an MPI call using the host buffer and lastly, a copy of the received host buffer back to the device memory. In the latter, the host buffers are completely skipped, as the MPI environment uses the device buffers directly.

| [in] | cartComm | The carthesian MPI communicator |

| [in] | devSend | The device buffer that needs to be sent |

| [in] | hostSend | The host buffer where the device buffer is first copied to (not needed here) |

| [in] | hostRecv | The host buffer that receives the halo values (not needed here) |

| [in] | devRecv | The device buffer that receives the halo buffers directly |

| [in] | neighbor | The rank of the neighbor MPI process in the carthesian communicator |

| [in] | elemCount | The number of elements to transfer |

| [in] | cartComm | The carthesian MPI communicator |

| [in] | devSend | The device buffer that needs to be sent |

| [in] | hostSend | The host buffer where the device buffer is first copied to |

| [in] | hostRecv | The host buffer that receives the halo values directly |

| [in] | devRecv | The device buffer where the receiving host buffer is copied to |

| [in] | neighbor | The rank of the neighbor MPI process in the carthesian communicator |

| [in] | elemCount | The number of elements to transfer |

| void Finalize | ( | real * | devBlocks[2], |

| real * | devSideEdges[2], | ||

| real * | devHaloLines[2], | ||

| real * | hostSendLines[2], | ||

| real * | hostRecvLines[2], | ||

| real * | devResidue, | ||

| cudaStream_t | copyStream | ||

| ) |

Close (finalize) the MPI environment and deallocate buffers.

| [in] | devBlocks | The 2 device blocks that were used during the Jacobi iterations |

| [in] | devSideEdges | The 2 device side edges that were used to hold updated halos before sending |

| [in] | devHaloLines | The 2 device lines that were used to hold received halos |

| [in] | hostSendLines | The 2 host send buffers that were used at halo exchange in the normal CUDA & MPI version |

| [in] | hostRecvLines | The 2 host receive buffers that were used at halo exchange in the normal CUDA & MPI version |

| [in] | devResidue | The global residue, kept in device memory |

| [in] | copyStream | The stream used to overlap top & bottom halo exchange with side halo copy to host memory |

| void Initialize | ( | int * | argc, |

| char *** | argv, | ||

| int * | rank, | ||

| int * | size | ||

| ) |

Initialize the MPI environment, allowing the CUDA device to be selected before (if necessary)

| [in,out] | argc | The number of command-line arguments |

| [in,out] | argv | The list of command-line arguments |

| [out] | rank | The global rank of the current MPI process |

| [out] | size | The total number of MPI processes available |

| void InitializeDataChunk | ( | int | topSizeY, |

| int | topIdxY, | ||

| const int2 * | domSize, | ||

| const int * | neighbors, | ||

| cudaStream_t * | copyStream, | ||

| real * | devBlocks[2], | ||

| real * | devSideEdges[2], | ||

| real * | devHaloLines[2], | ||

| real * | hostSendLines[2], | ||

| real * | hostRecvLines[2], | ||

| real ** | devResidue | ||

| ) |

This allocates and initializes all the relevant data buffers before the Jacobi run.

| [in] | topSizeY | The size of the topology in the Y direction |

| [in] | topIdxY | The Y index of the calling MPI process in the topology |

| [in] | domSize | The size of the local domain (for which only the current MPI process is responsible) |

| [in] | neighbors | The neighbor ranks, according to the topology |

| [in] | copyStream | The stream used to overlap top & bottom halo exchange with side halo copy to host memory |

| [out] | devBlocks | The 2 device blocks that will be updated during the Jacobi run |

| [out] | devSideEdges | The 2 side edges (parallel to the Y direction) that will hold the packed halo values before sending them |

| [out] | devHaloLines | The 2 halo lines (parallel to the Y direction) that will hold the packed halo values after receiving them |

| [out] | hostSendLines | The 2 host send buffers that will be used during the halo exchange by the normal CUDA & MPI version |

| [out] | hostRecvLines | The 2 host receive buffers that will be used during the halo exchange by the normal CUDA & MPI version |

| [out] | devResidue | The global device residue, which will be updated after every Jacobi iteration |

| int ParseCommandLineArguments | ( | int | argc, |

| char ** | argv, | ||

| int | rank, | ||

| int | size, | ||

| int2 * | domSize, | ||

| int2 * | topSize, | ||

| int * | useFastSwap | ||

| ) |

Parses the application's command-line arguments.

| [in] | argc | The number of input arguments |

| [in] | argv | The input arguments |

| [in] | rank | The MPI rank of the calling process |

| [in] | size | The total number of MPI processes available |

| [out] | domSize | The parsed domain size (2D) |

| [out] | topSize | The parsed topology size (2D) |

| [out] | useFastSwap | The parsed flag for fast block swap |

| void PostRunJacobi | ( | MPI_Comm | cartComm, |

| int | rank, | ||

| int | size, | ||

| const int2 * | topSize, | ||

| const int2 * | domSize, | ||

| int | iterations, | ||

| int | useFastSwap, | ||

| double | timerStart, | ||

| double | avgTransferTime | ||

| ) |

This function is called immediately after the main Jacobi loop.

| [in] | cartComm | The carthesian communicator |

| [in] | rank | The rank of the calling MPI process |

| [in] | topSize | The size of the topology |

| [in] | domSize | The size of the local domain |

| [in] | iterations | The number of successfully completed Jacobi iterations |

| [in] | useFastSwap | The flag indicating if fast pointer swapping was used to exchange blocks |

| [in] | timerStart | The Jacobi loop starting moment (measured as wall-time) |

| [in] | avgTransferTime | The average time spent performing MPI transfers (per process) |

| void PreRunJacobi | ( | MPI_Comm | cartComm, |

| int | rank, | ||

| int | size, | ||

| double * | timerStart | ||

| ) |

This function is called immediately before the main Jacobi loop.

| [in] | cartComm | The carthesian communicator |

| [in] | rank | The rank of the calling MPI process |

| [in] | size | The total number of MPI processes available |

| [out] | timerStart | The Jacobi loop starting moment (measured as wall-time) |

| void RunJacobi | ( | MPI_Comm | cartComm, |

| int | rank, | ||

| int | size, | ||

| const int2 * | domSize, | ||

| const int2 * | topIndex, | ||

| const int * | neighbors, | ||

| int | useFastSwap, | ||

| real * | devBlocks[2], | ||

| real * | devSideEdges[2], | ||

| real * | devHaloLines[2], | ||

| real * | hostSendLines[2], | ||

| real * | hostRecvLines[2], | ||

| real * | devResidue, | ||

| cudaStream_t | copyStream, | ||

| int * | iterations, | ||

| double * | avgTransferTime | ||

| ) |

This is the main Jacobi loop, which handles device computation and data exchange between MPI processes.

| [in] | cartComm | The carthesian MPI communicator |

| [in] | rank | The rank of the calling MPI process |

| [in] | size | The number of available MPI processes |

| [in] | domSize | The 2D size of the local domain |

| [in] | topIndex | The 2D index of the calling MPI process in the topology |

| [in] | neighbors | The list of ranks which are direct neighbors to the caller |

| [in] | useFastSwap | This flag indicates if blocks should be swapped through pointer copy (faster) or through element-by-element copy (slower) |

| [in,out] | devBlocks | The 2 device blocks that are updated during the Jacobi run |

| [in,out] | devSideEdges | The 2 side edges (parallel to the Y direction) that hold the packed halo values before sending them |

| [in,out] | devHaloLines | The 2 halo lines (parallel to the Y direction) that hold the packed halo values after receiving them |

| [in,out] | hostSendLines | The 2 host send buffers that are used during the halo exchange by the normal CUDA & MPI version |

| [in,out] | hostRecvLines | The 2 host receive buffers that are used during the halo exchange by the normal CUDA & MPI version |

| [in,out] | devResidue | The global device residue, which gets updated after every Jacobi iteration |

| [in] | copyStream | The stream used to overlap top & bottom halo exchange with side halo copy to host memory |

| [out] | iterations | The number of successfully completed iterations |

| [out] | avgTransferTime | The average time spent performing MPI transfers (per process) |

| void SetDeviceAfterInit | ( | int | rank | ) |

This allows the MPI process to set the CUDA device after the MPI environment is initialized For the CUDA-aware MPI version, there is nothing to be done here.

| [in] | rank | The global rank of the calling MPI process |

This allows the MPI process to set the CUDA device after the MPI environment is initialized For the CUDA-aware MPI version, there is nothing to be done here.

| [in] | rank | The global rank of the calling MPI process |

1.7.6.1

1.7.6.1