Engineering Probability Class 6 Thurs 2021-02-11

1 New teaching tool

Today's new gimmick is mirroring my iPad to a window on my laptop, so I can use the iPad as a graphics tablet. Relevant SW: uxplay on the laptop (see https://rodrigoribeiro.site/2020/08/09/mirroring-ipad-iphone-screen-on-linux/) and any note-taking tool on the iPad.

Earlier I tried using a laptop with a touch screen. That didn't work well, apparently because of deficiencies in the Linux SW controlling the screen.

2 Applied EE

My home Tesla Powerwalls (27 kWh capacity) and solar panels (8 kW peak) are finally working. Over the year, my net electrical consumption will be close to zero. I can survive perhaps a 2-day blackout. However, the real reason for getting them is that I like gadgets.

3 Leon-Garcia, chapter 2, ctd

-

2.4 Conditional probability, page 47.

big topic

E.g., if it snows today, is it more likely to snow tomorrow? next week? in 6 months?

E.g., what is the probability of the stock market rising tomorrow given that (it went up today, the deficit went down, an oil pipeline was blown up, ...)?

What's the probability that a CF bulb is alive after 1000 hours given that I bought it at Walmart?

definition \(P[A|B] = \frac{P[A\cap B]}{P[B]}\)

-

E.g., if DARPA had been allowed to run its Futures Markets Applied to Prediction (FutureMAP) would the future probability of King Zog I being assassinated be dependent on the amount of money bet on that assassination occurring?

Is that good or bad?

Would knowing that the real Zog survived over 55 assassination attempts change the probability of a future assassination?

-

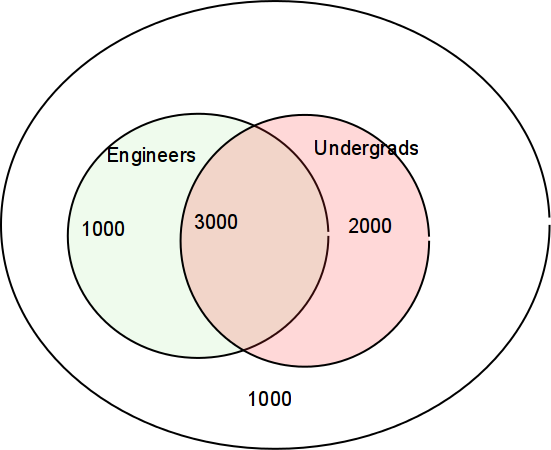

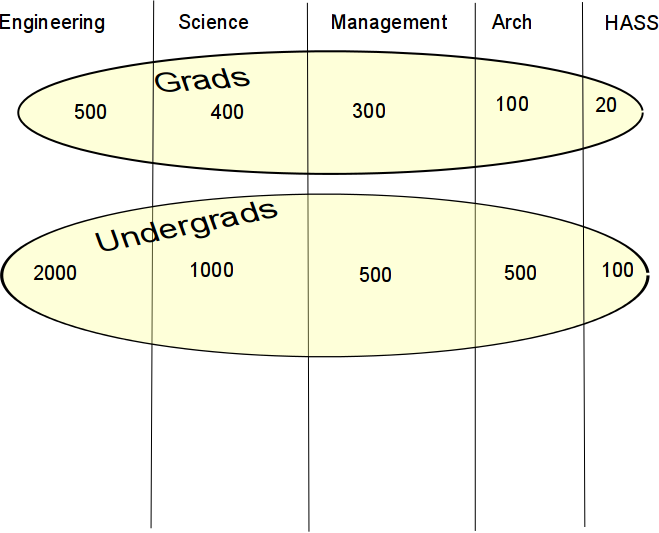

Consider a fictional university that has both undergrads and grads. It also has both Engineers and others:

\(P[A\cap B] = P[A|B]P[B] = P[B|A]P[A]\)

Example 2.26 Binary communication. Source transmits 0 with probability (1-p) and 1 with probability p. Receiver errs with probability e. What are probabilities of 4 events?
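The four events are the (sent, received) pairs, and each joint probability follows from \(P[A\cap B] = P[A|B]P[B]\). Here is a minimal sketch; the function name and the values p=0.5, e=0.1 are illustrative assumptions, not from the textbook.

```python
def binary_channel_joint(p, e):
    """Joint probabilities P[sent=i and received=j] for a binary channel.

    p: probability that the source sends 1 (0 is sent w.p. 1-p).
    e: probability that the receiver gets the bit wrong.
    """
    return {
        (0, 0): (1 - p) * (1 - e),  # sent 0, received 0
        (0, 1): (1 - p) * e,        # sent 0, received 1 (error)
        (1, 0): p * e,              # sent 1, received 0 (error)
        (1, 1): p * (1 - e),        # sent 1, received 1
    }

joint = binary_channel_joint(p=0.5, e=0.1)  # illustrative values only
for (sent, received), prob in joint.items():
    print(f"P[sent {sent}, received {received}] = {prob:.3f}")
```

The four probabilities sum to 1 because the four events partition the sample space.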

-

Total probability theorem

\(B_i\) mutually exclusive events whose union is S

\(P[A] = P[A\cap B_1] + P[A\cap B_2] + ...\)

\(P[A] = P[A|B_1]P[B_1]\) \(+ P[A|B_2]P[B_2] + ...\)

What's the probability that a student is an undergrad, given ... (Numbers are fictitious.)

-

Example 2.28. Chip quality control.

Each chip is either good or bad.

P[good]=(1-p), P[bad]=p.

If the chip is good: P[still alive at t] = \(e^{-at}\)

If the chip is bad: P[still alive at t] = \(e^{-1000at}\)

What's the probability that a random chip is still alive at t?
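By the total probability theorem, with the partition {good, bad}, P[alive at t] = \((1-p)e^{-at} + p e^{-1000at}\). A small sketch of that computation follows; the function name is made up, and the numbers p=0.1, a=1/20000 are borrowed from Example 2.30.

```python
import math

def p_alive(t, p, a):
    """P[random chip is still alive at time t], by total probability."""
    good_term = (1 - p) * math.exp(-a * t)        # P[alive | good] P[good]
    bad_term = p * math.exp(-1000 * a * t)        # P[alive | bad] P[bad]
    return good_term + bad_term

# Values from Example 2.30; t is in the same time unit as 1/a.
for t in (0, 10, 48, 1000):
    print(t, round(p_alive(t, p=0.1, a=1 / 20000), 4))
```

At t=0 every chip is alive, and the bad chips' contribution dies off about 1000 times faster than the good chips'.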

-

2.4.1, p52. Bayes' rule. This lets you invert the conditional probabilities.

-

\(B_j\) partition S. That means that

If \(i\ne j\) then \(B_i\cap B_j=\emptyset\) and

\(\bigcup_i B_i = S\)

\(P[B_j|A] = \frac{P[B_j\cap A]}{P[A]}\) \(= \frac{P[A|B_j] P[B_j]}{\sum_k P[A|B_k] P[B_k]}\)

-

application:

We have a priori probs \(P[B_j]\)

Event A occurs. Knowing that A has happened gives us info that changes the probs.

Compute a posteriori probs \(P[B_j|A]\)

-

In the above diagram, what's the probability that an undergrad is an engineer?

Example 2.29 comm channel: If receiver sees 1, which input was more probable? (You hope the answer is 1.)
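Bayes' rule for a partition can be sketched as a short function: multiply each prior by its likelihood, then normalize by the total probability. The function name and the numbers (e=0.1, and the two choices of prior) are assumptions for illustration.

```python
def posterior(priors, likelihoods):
    """A posteriori probabilities P[B_j | A].

    priors[j]      = P[B_j], where the B_j partition S
    likelihoods[j] = P[A | B_j]
    """
    joint = [lk * pr for lk, pr in zip(likelihoods, priors)]  # P[A and B_j]
    total = sum(joint)                                        # P[A]
    return [j / total for j in joint]

e = 0.1                         # assumed receiver error probability
likelihood_of_1 = [e, 1 - e]    # P[receive 1 | sent 0], P[receive 1 | sent 1]

print(posterior([0.5, 0.5], likelihood_of_1))  # equally likely inputs
print(posterior([0.9, 0.1], likelihood_of_1))  # input 0 sent 90% of the time
```

With equally likely inputs, a received 1 most probably came from a sent 1. With the second prior, the two inputs come out equally probable, so the hoped-for answer depends on the a priori probabilities.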

Example 2.30 chip quality control: For example 2.28, how long do we have to burn in chips so that the survivors have a 99% probability of being good? p=0.1, a=1/20000.
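One way to work this: set P[good | alive at t] equal to the target and solve for t. Using Bayes' rule with the survival probabilities from Example 2.28, the condition reduces to \(e^{-999at} = \frac{(1-p)(1-c)}{pc}\), where c is the target (0.99 here). The symbol c and the function names below are my own; this is a sketch, not the textbook's presentation.

```python
import math

def p_good_given_alive(t, p, a):
    """Bayes' rule: P[good | chip still alive at t]."""
    good = (1 - p) * math.exp(-a * t)
    bad = p * math.exp(-1000 * a * t)
    return good / (good + bad)

def burn_in_time(p, a, target):
    """Smallest t with P[good | alive at t] >= target."""
    ratio = (p * target) / ((1 - p) * (1 - target))
    return math.log(ratio) / (999 * a)

t = burn_in_time(p=0.1, a=1 / 20000, target=0.99)
print(round(t, 1))                                   # about 48 time units
print(round(p_good_given_alive(t, 0.1, 1 / 20000), 4))
```

The time is in whatever unit 1/a is expressed in. The second print is a sanity check that the survivors at that time are 99% good.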

-

Example: False positives in a medical test

T = test for disease was positive; T' = .. negative

D = you have disease; D' = .. don't ..

P[T|D] = .99, P[T' | D'] = .95, P[D] = 0.001

P[D' | T] (false positive) = 0.98 !!!
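The 0.98 comes from Bayes' rule, with P[T|D'] = 1 - P[T'|D'] = 0.05. A quick check of the arithmetic (the variable names are mine):

```python
p_d = 0.001                 # P[D], a priori probability of disease
p_t_given_d = 0.99          # P[T | D]
p_tneg_given_dneg = 0.95    # P[T' | D']

p_t_given_dneg = 1 - p_tneg_given_dneg                       # P[T | D']
p_t = p_t_given_d * p_d + p_t_given_dneg * (1 - p_d)         # total probability
p_dneg_given_t = p_t_given_dneg * (1 - p_d) / p_t            # Bayes' rule

print(f"P[T]      = {p_t:.5f}")
print(f"P[D' | T] = {p_dneg_given_t:.3f}")   # about 0.98
```

The disease is so rare that the 5% of healthy people who test positive greatly outnumber the sick people who test positive.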

-

Multinomial probability law

There are M different possible outcomes from an experiment, e.g., faces of a die showing.

Probability of particular outcome: \(p_i\)

Now run the experiment n times.

-

Probability that i-th outcome occurred \(k_i\) times, \(\sum_{i=1}^M k_i = n\)

\begin{equation*} P[(k_1,k_2,...,k_M)] = \frac{n!}{k_1! k_2! ... k_M!} p_1^{k_1} p_2^{k_2}...p_M^{k_M} \end{equation*}
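The formula translates directly into code. This sketch uses a fair die as the example (my choice, not one of the textbook examples), and the function name is made up.

```python
from math import factorial

def multinomial_prob(counts, probs):
    """P[(k_1, ..., k_M)] where counts[i] = k_i and probs[i] = p_i."""
    n = sum(counts)
    coeff = factorial(n)
    for k in counts:
        coeff //= factorial(k)      # exact integer division at every step
    result = float(coeff)
    for k, p in zip(counts, probs):
        result *= p ** k
    return result

# Toss a fair die 6 times: probability that every face shows exactly once.
print(multinomial_prob([1] * 6, [1 / 6] * 6))   # 6!/6^6, about 0.0154
```

With M=2 this reduces to the binomial law.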

Example 2.41 p63 dartboard.

Example 2.42 p63 random phone numbers.

-

2.7 Computer generation of random numbers

Skip this section, except for following points.

Executive summary: it's surprisingly hard to generate good random numbers. Commercial SW has been known to get this wrong. By now, they've gotten it right (I hope), so just call a subroutine.

Arizona lottery got it wrong in 1998.

Even random electronic noise is hard to use properly. The best selling 1955 book A Million Random Digits with 100,000 Normal Deviates had trouble generating random numbers this way. Asymmetries crept into their circuits perhaps because of component drift. For a laugh, read the reviews.

Pseudo-random number generator: The subroutine returns numbers according to some algorithm (e.g., it doesn't use cosmic rays), but for your purposes, they're random.

Computer random number routines usually return the same sequence of numbers each time you run your program, so you can reproduce your results.

You can override this by seeding the generator with a genuine random number from Linux /dev/random.
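A short Python sketch of both ideas. One caveat: unlike C's rand(), Python's random module seeds itself from the operating system by default, so here it is the explicit fixed seed that gives the repeatable sequence. The seed value 12345 is arbitrary. os.urandom asks the OS for random bytes; on Linux these come from the kernel's random number source.

```python
import os
import random

# Same seed, same pseudo-random sequence: results are reproducible.
random.seed(12345)
first = [random.random() for _ in range(3)]
random.seed(12345)
second = [random.random() for _ in range(3)]
print(first == second)   # True

# Seed from the operating system's entropy source instead:
# the sequence now differs from run to run.
seed = int.from_bytes(os.urandom(8), "big")
random.seed(seed)
print(random.random())
```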

2.8 and 2.9 p70 Fine points: Skip.

-

Review Bayes' theorem, since it is important. Here is a fictitious (because none of these probabilities have any justification) SETI example.

A priori probability of extraterrestrial life = P[L] = \(10^{-8}\).

For ease of typing, let L' be the complement of L.

Run a SETI experiment. R (for Radio) is the event that it has a positive result.

P[R|L] = \(10^{-5}\), P[R|L'] = \(10^{-10}\).

What is P[L|R] ?
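One way to work it, applying Bayes' rule with the partition {L, L'} and P[L'] = \(1-10^{-8}\):

\begin{equation*} P[L|R] = \frac{P[R|L]P[L]}{P[R|L]P[L] + P[R|L']P[L']} = \frac{10^{-5}\cdot 10^{-8}}{10^{-5}\cdot 10^{-8} + 10^{-10}(1-10^{-8})} \approx \frac{10^{-13}}{1.001\times 10^{-10}} \approx 10^{-3} \end{equation*}

With these made-up numbers, a positive result raises the probability of life by a factor of about \(10^5\), yet life is still improbable.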

-

Some specific probability laws

In all of these, successive events are independent of each other.

A Bernoulli trial is one toss of a coin where p is probability of head.

We saw binomial and multinomial probabilities in class 4.

The binomial law gives the probability of exactly k heads in n tosses of an unfair coin.

The multinomial law gives the probability of exactly \(k_i\) occurrences of the i-th face in n tosses of a die.

4 Bayes theorem ctd

-

We'll do these examples from Leon-Garcia in class.

-

Example 2.28, page 51. I'll use e=0.1.

Variant: Assume that P[A0]=.9. Redo the example.

Example 2.30, page 53, chip quality control: For example 2.28, how long do we have to burn in chips so that the survivors have a 99% probability of being good? p=0.1, a=1/20000.

-

Event A is that a random person has a lycanthropy gene. Assume P(A) = .01.

Genes-R-Us has a DNA test for this. B is the event of a positive test. There are false positives and false negatives each w.p. (with probability) 0.1. That is, P(B|A') = P(B' | A) = 0.1

What's P(A')?

What's P(A and B)?

What's P(A' and B)?

What's P(B)?

You test positive. What's the probability you're really positive, P(A|B)?
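The five questions can be checked numerically. This is a sketch of the arithmetic only; the variable names are mine.

```python
p_a = 0.01               # P(A): person has the gene
p_b_given_not_a = 0.1    # P(B | A'): false positive
p_not_b_given_a = 0.1    # P(B' | A): false negative

p_not_a = 1 - p_a                                # P(A')
p_a_and_b = (1 - p_not_b_given_a) * p_a          # P(A and B) = P(B|A) P(A)
p_not_a_and_b = p_b_given_not_a * p_not_a        # P(A' and B)
p_b = p_a_and_b + p_not_a_and_b                  # P(B), total probability
p_a_given_b = p_a_and_b / p_b                    # P(A|B), Bayes' rule

print(f"P(A')       = {p_not_a:.3f}")
print(f"P(A and B)  = {p_a_and_b:.3f}")
print(f"P(A' and B) = {p_not_a_and_b:.3f}")
print(f"P(B)        = {p_b:.3f}")
print(f"P(A|B)      = {p_a_given_b:.4f}")
```

Even after a positive test, the probability of really having the gene is only about 1 in 12, because the gene is rare.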

5 Chapter 2 ctd: Independent events

-

2.5 Independent events

\(P[A\cap B] = P[A] P[B]\)

P[A|B] = P[A], P[B|A] = P[B]

A,B independent means that knowing A doesn't help you with B.

Mutually exclusive events w.p.>0 must be dependent.

-

Example 2.33, page 56.

-

More than 2 events:

N events are independent iff the occurrence of no combo of the events affects another event.

Each pair is independent.

Also need \(P[A\cap B\cap C] = P[A] P[B] P[C]\)

This is not intuitive: A, B, and C might be pairwise independent but, as a group of 3, dependent.

See example 2.32, page 55. A: x>1/2. B: y>1/2. C: x>y
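Here is a standard illustration of pairwise independence without mutual independence, checked by enumerating a sample space. It is not the textbook example: it uses two fair coin tosses, with A = first toss is a head, B = second toss is a head, C = the two tosses agree.

```python
from fractions import Fraction
from itertools import product

outcomes = list(product("HT", repeat=2))   # 4 equally likely outcomes

def prob(event):
    """Probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for w in outcomes if event(w)), len(outcomes))

A = lambda w: w[0] == "H"      # first toss is a head
B = lambda w: w[1] == "H"      # second toss is a head
C = lambda w: w[0] == w[1]     # the two tosses agree

pairwise = all(
    prob(lambda w: X(w) and Y(w)) == prob(X) * prob(Y)
    for X, Y in [(A, B), (A, C), (B, C)]
)
triple = prob(lambda w: A(w) and B(w) and C(w)) == prob(A) * prob(B) * prob(C)

print(pairwise)   # True: each pair is independent
print(triple)     # False: P[A and B and C] = 1/4, but P[A]P[B]P[C] = 1/8
```

Knowing any one of the three events tells you nothing about another, but knowing two of them determines the third.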

Common application: independence of experiments in a sequence.

-

Example 2.34: coin tosses are assumed to be independent of each other.

P[HHT] = P[1st coin is H] P[2nd is H] P[3rd is T].

-

Example 2.35, page 58. System reliability

Controller and 3 peripherals.

System is up iff controller and at least 2 peripherals are up.

Add a 2nd controller.
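A sketch of the reliability calculation, under assumptions that are mine and not necessarily the textbook's: every component is up independently with the same probability q=0.9, and with two controllers the system needs at least one of them up.

```python
from math import comb

def p_at_least(k, n, q):
    """P[at least k of n independent components are up], each up w.p. q."""
    return sum(comb(n, j) * q**j * (1 - q) ** (n - j) for j in range(k, n + 1))

def p_system_up(q_ctrl, q_periph, n_ctrl=1):
    """System is up iff some controller and at least 2 of 3 peripherals are up."""
    ctrl_ok = 1 - (1 - q_ctrl) ** n_ctrl      # at least one controller is up
    periph_ok = p_at_least(2, 3, q_periph)
    return ctrl_ok * periph_ok                # independence lets us multiply

q = 0.9   # assumed up-probability for every component
print(f"1 controller:  {p_system_up(q, q, n_ctrl=1):.4f}")
print(f"2 controllers: {p_system_up(q, q, n_ctrl=2):.4f}")
```

With one controller, the controller is the weak point; adding a second removes most of that loss.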

2.6 p59 Sequential experiments: maybe independent

-

2.6.1 Sequences of independent experiments

Example 2.36

-

2.6.2 Binomial probability

Bernoulli trial: flip a possibly unfair coin once. p is the probability of a head.

(Bernoulli did stats, econ, physics, ... in 18th century.)

-

Example 2.37

P[TTH] = \((1-p)^2 p\)

P[1 head] = \(3 (1-p)^2 p\)

Probability of exactly k successes = \(p_n(k) = {n \choose k} p^k (1-p)^{n-k}\)

\(\sum_{k=0}^n p_n(k) = 1\)

Example 2.38

Can avoid computing n! by computing \(p_n(k)\) recursively, or by using an approximation. Also, in C++, using double instead of float helps. (Almost always you should use double instead of float. For scalar code on current desktop CPUs it is usually about the same speed.)
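The recursion follows from the ratio of successive terms: \(p_n(k+1) = p_n(k) \frac{n-k}{k+1} \frac{p}{1-p}\), starting from \(p_n(0) = (1-p)^n\). A minimal sketch, assuming 0 < p < 1 (the function name is made up):

```python
def binomial_pmf(n, p):
    """Return [p_n(0), ..., p_n(n)] for 0 < p < 1, using no factorials."""
    pmf = [(1 - p) ** n]            # p_n(0)
    odds = p / (1 - p)
    for k in range(n):
        # p_n(k+1) = p_n(k) * (n-k)/(k+1) * p/(1-p)
        pmf.append(pmf[k] * (n - k) / (k + 1) * odds)
    return pmf

print(binomial_pmf(3, 0.5))          # [0.125, 0.375, 0.375, 0.125]
print(sum(binomial_pmf(50, 0.3)))    # very close to 1
```

For very large n the starting value \((1-p)^n\) can underflow to zero, so this simple version is meant for moderate n.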

Example 2.39

Example 2.40 Error correction coding

7 To watch

Rich Radke's Probability Bites:

Binomial and Geometric Practice Problems

Bernoulli's Theorem

Discrete Random Variables

https://www.youtube.com/playlist?list=PLuh62Q4Sv7BXkeKW4J_2WQBlYhKs_k-pj