CG Class 24, Thurs 2017-11-02


1 Next week

- Prof Radke will talk on Mon.

- No class on Thurs.

2 Extended due dates

- Homework 6 now due today.

- Term project progress report due Mon.

3 Vive VR and Ricoh Theta V available

Salles now has the Vive running. In his opinion, it's better than the Microsoft HoloLens.

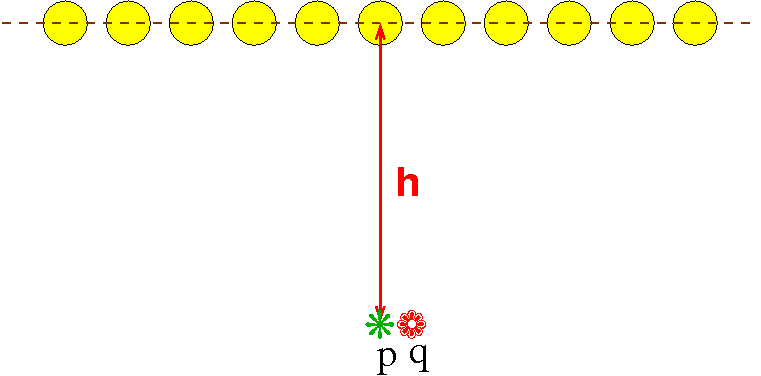

5 Why textures are hard to do

- ... although the idea is simple.

- You want to take each texel on each object, find which pixel it projects onto, and draw it there.

- That's too expensive, and the mapping is messy: because of the projection, a projected texel is almost never the same size as a pixel.

- Sometimes several texels will project to the same pixel, so they have to be averaged together to get the pixel color.

- At other times, one texel will project over several pixels, so you want the pixel colors to blend smoothly from one texel to the next.

- Most texels will be invisible in the final image, so processing them anyway wastes a lot of computing power, even on today's computers.

- So, this is how it's really implemented.

- For each visible fragment (i.e., pixel), find which texel maps onto it.

- This requires a backwards version of the graphics pipeline.

- The interesting surfaces are curved: each parameter pair (u,v) is mapped to a point (x,y,z) by a bicubic polynomial. You have to invert that mapping.

- All this has to be computed at hundreds of millions of pixels per second.

- This usually relies on dedicated texture-mapping hardware for speed.
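Once the lookup runs from pixel to texel, texture filtering handles the two size mismatches described above: many texels landing on one pixel, and one texel spreading over many pixels. The Python sketch below is illustrative only. It assumes a grayscale texture stored as a list of rows and (u,v) in [0,1], with texel centers at ((i+0.5)/w, (j+0.5)/h). The helper names (`texel`, `sample_bilinear`, `sample_box`) are hypothetical. Bilinear blending covers the one-texel-over-many-pixels case, and a plain box average over the pixel's footprint covers the many-texels-per-pixel case.

```python
import math

# Hypothetical texture layout: tex[row][col] holds a grayscale value.
def texel(tex, i, j):
    """Fetch the texel in column i, row j, clamping out-of-range indices to the edge."""
    h, w = len(tex), len(tex[0])
    i = min(max(i, 0), w - 1)
    j = min(max(j, 0), h - 1)
    return tex[j][i]

def sample_bilinear(tex, u, v):
    """Magnification: blend the 4 nearest texels so the color varies smoothly
    between texel centers. (u, v) are in [0, 1]."""
    h, w = len(tex), len(tex[0])
    # Continuous texel coordinates, shifted so texel centers land on integers.
    x = u * w - 0.5
    y = v * h - 0.5
    i0, j0 = math.floor(x), math.floor(y)
    fx, fy = x - i0, y - j0  # fractional position between neighboring centers
    top = (1 - fx) * texel(tex, i0, j0) + fx * texel(tex, i0 + 1, j0)
    bottom = (1 - fx) * texel(tex, i0, j0 + 1) + fx * texel(tex, i0 + 1, j0 + 1)
    return (1 - fy) * top + fy * bottom

def sample_box(tex, u0, v0, u1, v1):
    """Minification: average every texel whose center falls inside the pixel's
    footprint [u0, u1) x [v0, v1) in texture space."""
    h, w = len(tex), len(tex[0])
    total, count = 0.0, 0
    for j in range(h):
        for i in range(w):
            cu, cv = (i + 0.5) / w, (j + 0.5) / h
            if u0 <= cu < u1 and v0 <= cv < v1:
                total += tex[j][i]
                count += 1
    if count == 0:
        # Footprint smaller than a texel: fall back to blending at its center.
        return sample_bilinear(tex, (u0 + u1) / 2, (v0 + v1) / 2)
    return total / count
```

For example, on the 2x2 texture `[[0.0, 1.0], [2.0, 3.0]]`, both `sample_bilinear(tex, 0.5, 0.5)` and `sample_box(tex, 0, 0, 1, 1)` give 1.5, the average of all four texels. Looping over every texel for each pixel is only for clarity. Hardware typically approximates the minification average with precomputed, prefiltered mipmap levels.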
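The rasterizer actually draws planar triangles. For one of these, running the pipeline backwards for a single pixel amounts to recovering the pixel's barycentric coordinates and interpolating the vertices' (u,v). Because of the perspective divide, u and v are not linear in screen space, but u/w, v/w and 1/w are. Here is a minimal sketch. It assumes each vertex is given as a hypothetical tuple (x, y, w, u, v), where x and y are screen coordinates and w is the clip-space w. The function names are illustrative.

```python
def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of triangle (a, b, p)."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def pixel_to_uv(tri, px, py):
    """Return the perspective-correct (u, v) seen at screen point (px, py),
    or None if the point lies outside the triangle.
    tri: three hypothetical vertex tuples (x, y, w, u, v)."""
    (x0, y0, w0, u0, v0), (x1, y1, w1, u1, v1), (x2, y2, w2, u2, v2) = tri
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return None  # degenerate triangle
    # Screen-space barycentric coordinates (they sum to 1).
    b0 = edge(x1, y1, x2, y2, px, py) / area
    b1 = edge(x2, y2, x0, y0, px, py) / area
    b2 = edge(x0, y0, x1, y1, px, py) / area
    if min(b0, b1, b2) < 0:
        return None
    # u/w, v/w and 1/w interpolate linearly on screen; divide to undo the perspective.
    inv_w = b0 / w0 + b1 / w1 + b2 / w2
    u = (b0 * u0 / w0 + b1 * u1 / w1 + b2 * u2 / w2) / inv_w
    v = (b0 * v0 / w0 + b1 * v1 / w1 + b2 * v2 / w2) / inv_w
    return u, v
```

With vertices `(0, 0, 1, 0, 0)`, `(4, 0, 3, 1, 0)` and `(0, 4, 1, 0, 1)`, the screen midpoint `(2, 0)` of the first edge maps to u = 0.25 rather than 0.5. The far end of that edge is compressed on screen, so its pixels cover more of the texture each.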
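A curved bicubic patch generally has no simple closed-form inverse, so one option is an iterative solve such as Newton's method. The sketch below covers a simplified, hypothetical case. It uses a bicubic Bezier map from (u,v) directly to a 2D screen position (x,y), with invented control points `CTRL`, and it assumes the map is invertible over the patch. A real renderer would also have to handle the projection of (x,y,z) and a poor starting guess. All names here are illustrative.

```python
def bernstein(t):
    """Cubic Bernstein basis values and their derivatives at t."""
    s = 1.0 - t
    b = [s * s * s, 3 * t * s * s, 3 * t * t * s, t * t * t]
    db = [-3 * s * s, 3 * s * s - 6 * t * s, 6 * t * s - 3 * t * t, 3 * t * t]
    return b, db

def patch(ctrl, u, v):
    """Evaluate the bicubic map F(u, v) = (x, y) and its partials dF/du, dF/dv."""
    bu, dbu = bernstein(u)
    bv, dbv = bernstein(v)
    x = y = xu = yu = xv = yv = 0.0
    for i in range(4):          # i indexes the u direction
        for j in range(4):      # j indexes the v direction
            px, py = ctrl[i][j]
            w, wu, wv = bu[i] * bv[j], dbu[i] * bv[j], bu[i] * dbv[j]
            x += w * px
            y += w * py
            xu += wu * px
            yu += wu * py
            xv += wv * px
            yv += wv * py
    return (x, y), (xu, yu), (xv, yv)

def invert(ctrl, tx, ty, u=0.5, v=0.5, iters=20, tol=1e-12):
    """Newton's method: find (u, v) with F(u, v) = (tx, ty), or None on failure."""
    for _ in range(iters):
        (x, y), (xu, yu), (xv, yv) = patch(ctrl, u, v)
        ex, ey = x - tx, y - ty
        if abs(ex) + abs(ey) < tol:
            return u, v
        # Jacobian J = [[xu, xv], [yu, yv]]; solve J [du, dv] = [ex, ey].
        det = xu * yv - xv * yu
        if det == 0:
            return None  # singular Jacobian: patch folds or is degenerate here
        du = (yv * ex - xv * ey) / det
        dv = (-yu * ex + xu * ey) / det
        u, v = u - du, v - dv
    return None

# Invented control points: a mildly sheared and bent unit square.
CTRL = [[(i / 3 + 0.1 * j / 3, j / 3 + 0.2 * (i / 3) ** 2) for j in range(4)]
        for i in range(4)]
```

With these control points, the map works out to x = u + 0.1v and y = v + 0.2(u^2 + u(1-u)/3). So `invert(CTRL, 0.37, 0.732)` should recover approximately (0.3, 0.7). Newton's method converges quickly from a nearby guess. It can wander off, or hit a singular Jacobian, when the patch folds over on screen.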

6 Chapter 9 slides

- Start of a big topic.