Engineering Probability Class 4 Mon 2020-01-27

1 Probability in the real world - enrichment

Statistician Cracks Code For Lottery Tickets

Finding these stories is just too easy.

2 Chapter 2 ctd

Today: counting methods, Leon-Garcia section 2.3, page 41.

- We have an urn with n balls.

- Maybe the balls are all different, maybe not.

- W/o looking, we take k balls out and look at them.

- Maybe we put each ball back after looking at it, maybe not.

- Suppose we took out one white and one green ball. Maybe we care about their order, so that white-then-green is a different case from green-then-white; maybe not.

Applications:

- How many ways can we divide a class of 12 students into 2 groups of 6?

- How many ways can we pick 4 teams of 6 students from a class of 88 students (leaving 64 students behind)?

- We pick 5 cards from a deck. What's the probability that they're all the same suit?

- We're picking teams of 12 students, but now the order matters since they're playing baseball and that's the batting order.

- We have 100 widgets; 10 are bad. We pick 5 widgets. What's the probability that none are bad? Exactly 1? More than 3?

- In the approval voting scheme, you mark as many candidates as you please. The candidate with the most votes wins. How many different ways can you mark the ballot?

- In preferential voting, you mark as many candidates as you please, but rank them 1,2,3,... How many different ways can you mark the ballot?
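
A few of these applications can be answered by direct counting. The Python sketch below is one reading of them, under assumptions the questions leave open: the two groups of 6 are interchangeable (unlabeled), "all the same suit" includes straight flushes, "more than 3" bad widgets means 4 or 5, and a blank ballot counts as one way to mark it in both voting schemes. The function names are illustrative, not from the text.

```python
from math import comb, perm

def split_into_two_groups(n: int) -> int:
    """Ways to split n students into 2 unlabeled groups of n/2 each."""
    # Choosing one group fixes the other; divide by 2 because the groups are unlabeled.
    return comb(n, n // 2) // 2

def same_suit_probability() -> float:
    """P[5 cards drawn from a 52-card deck all share one suit]."""
    return 4 * comb(13, 5) / comb(52, 5)

def widgets_bad(k: int, total: int = 100, bad: int = 10, drawn: int = 5) -> float:
    """P[exactly k bad widgets among `drawn` picked without replacement]."""
    return comb(bad, k) * comb(total - bad, drawn - k) / comb(total, drawn)

def approval_ballots(n: int) -> int:
    """Each of n candidates is either marked or not (blank ballot included)."""
    return 2 ** n

def preferential_ballots(n: int) -> int:
    """Rank any k of the n candidates, k = 0..n (blank ballot included)."""
    return sum(perm(n, k) for k in range(n + 1))

print(split_into_two_groups(12))  # 462
print(same_suit_probability())
print(widgets_bad(0), sum(widgets_bad(k) for k in range(4, 6)))
print(approval_ballots(5), preferential_ballots(5))
```

If the two groups of 6 are labeled (say, "red team" and "blue team"), drop the division by 2.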

Leon-Garcia 2.3: Counting methods, pp 41-46.

- finite sample space

- each outcome equally probable

- get some useful formulae

- warmup: consider a multiple-choice exam where the 1st question has 3 choices, the 2nd has 5 choices, and the 3rd has 6 choices.

- Q: How many ways can a student answer the exam?

- A: 3x5x6 = 90

- If there are k questions, and the i-th question has \(n_i\) answers, then the number of possible combinations of answers is \(n_1 n_2 \cdots n_k\).
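
The multiplication rule can be checked by brute force. A short Python sketch, assuming the three warmup questions (3, 5 and 6 choices), that enumerates every answer sheet with `itertools.product` and compares the count with \(n_1 n_2 n_3\):

```python
from itertools import product
from math import prod

choices = [3, 5, 6]  # n_i = number of answers to question i (warmup exam)

# Every answer sheet is one tuple (a_1, a_2, a_3) with a_i in range(n_i).
sheets = list(product(*(range(n) for n in choices)))

print(len(sheets), prod(choices))  # 90 90
```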

2.3.1 Sampling WITH replacement and WITH ordering

- Consider an urn with n different colored balls.

- Repeat k times:

- Draw a ball.

- Write down its color.

- Put it back.

- Number of distinct ordered k-tuples = \(n^k\)
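
A quick enumeration that agrees with \(n^k\). This Python sketch labels the n balls 0..n-1 (an assumption for illustration) and lets `itertools.product(..., repeat=k)` generate every ordered draw with replacement:

```python
from itertools import product

def ordered_with_replacement(n: int, k: int) -> int:
    """Count ordered k-tuples when each drawn ball is put back in the urn."""
    balls = range(n)  # n distinguishable balls, labeled 0..n-1
    return sum(1 for _ in product(balls, repeat=k))

for n, k in [(5, 2), (4, 2), (3, 4)]:
    print(n, k, ordered_with_replacement(n, k), n ** k)
```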

Example 2.15. How many distinct ordered pairs for 2 balls from 5? 5*5 = 25.

Review. Suppose I want to eat at one of the following 4 places, for tonight and again tomorrow, and don't care if I eat at the same place both times: Commons, Sage, Union, Knotty Pine. How many choices do I have for where to eat?

- 16

- 12

- 8

- 4

- something else

2.3.2 Sampling WITHOUT replacement and WITH ordering

- Consider an urn with n different colored balls.

- Repeat k times:

- Draw a ball.

- Write down its color.

- Don't put it back.

- Number of distinct ordered k-tuples = \(n(n-1)(n-2)\cdots(n-k+1)\)
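
The same check without replacement: `itertools.permutations(range(n), k)` yields each ordered k-tuple of distinct balls once, and its count should match both the falling product \(n(n-1)\cdots(n-k+1)\) and `math.perm`. A minimal sketch; `falling_product` is an illustrative helper name.

```python
from itertools import permutations
from math import perm

def ordered_without_replacement(n: int, k: int) -> int:
    """Count ordered k-tuples when drawn balls are not put back."""
    return sum(1 for _ in permutations(range(n), k))

def falling_product(n: int, k: int) -> int:
    """n(n-1)...(n-k+1), multiplied out term by term."""
    result = 1
    for i in range(k):
        result *= n - i
    return result

print(ordered_without_replacement(5, 2), falling_product(5, 2), perm(5, 2))  # 20 20 20
```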

Review. Suppose I want to visit two of the following four cities: Buffalo, Miami, Boston, New York. I don't want to visit one city twice, and the order matters. How many choices do I have?

- 16

- 12

- 8

- 4

- something else

Example 2.16: Draw 2 balls w/o replacement from an urn of 5 balls numbered 1 to 5.

- 5 choices for 1st ball, 4 for 2nd. 20 outcomes.

- Probability that 1st ball is larger?

- List the 20 outcomes. 10 have 1st ball larger. P=1/2.
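
Listing the 20 outcomes can be left to the computer. A sketch assuming the balls carry the numbers 1 to 5:

```python
from fractions import Fraction
from itertools import permutations

balls = range(1, 6)                      # balls numbered 1..5
outcomes = list(permutations(balls, 2))  # ordered draws without replacement
first_larger = [o for o in outcomes if o[0] > o[1]]

print(len(outcomes), len(first_larger), Fraction(len(first_larger), len(outcomes)))
# 20 10 1/2
```

The answer is 1/2 by symmetry: swapping the two draws maps each "first larger" outcome to a "second larger" one.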

Example 2.17: Draw 3 balls from 5 with replacement. What's the probability that they're all different?

- P = \(\small \frac{\text{\# cases where they're all different}}{\text{\# cases in total}}\)

- P = \(\small \frac{\text{\# cases w/o replacement}}{\text{\# cases w/ replacement}}\)

- P = \(\frac{5\cdot 4\cdot 3}{5\cdot 5\cdot 5} = \frac{60}{125} = 0.48\)
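
A brute-force check of the ratio, assuming balls numbered 1 to 5: enumerate all \(5^3\) ordered draws with replacement and keep those with 3 distinct values.

```python
from fractions import Fraction
from itertools import product

draws = list(product(range(1, 6), repeat=3))           # 5**3 ordered draws
all_different = [d for d in draws if len(set(d)) == 3]  # 5*4*3 of them

p = Fraction(len(all_different), len(draws))
print(p, float(p))  # 12/25 0.48
```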

2.3.3 Permutations of n distinct objects

Distinct means that you can tell the objects apart.

This is sampling w/o replacement with k = n.

\(1\cdot 2\cdot 3\cdots n = n!\)

It grows fast. 1!=1, 2!=2, 3!=6, 4!=24, 5!=120, 6!=720, 7!=5040

Stirling approx:

\begin{equation*} n! \approx \sqrt{2\pi n} \left(\frac{n}{e}\right)^n\left(1+\frac{1}{12n}+\cdots\right) \end{equation*}

Therefore, if you ignore the last factor, the relative error is about \(1/(12n)\).
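
A numerical check of the \(1/(12n)\) claim. This sketch keeps only the leading term \(\sqrt{2\pi n}(n/e)^n\) and compares it with the exact `math.factorial`; the ratio of the relative error to \(1/(12n)\) should approach 1 as n grows.

```python
from math import e, factorial, pi, sqrt

def stirling(n: int) -> float:
    """Leading Stirling term sqrt(2*pi*n) * (n/e)**n, without the 1 + 1/(12n) factor."""
    return sqrt(2 * pi * n) * (n / e) ** n

for n in [1, 5, 10, 50, 100]:
    exact = factorial(n)
    rel_err = (exact - stirling(n)) / exact
    # rel_err * 12n should approach 1 as n grows
    print(n, rel_err, rel_err * 12 * n)
```

The leading term always slightly underestimates n!, so the relative error is positive.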

Example 2.18. # permutations of 3 objects: 3! = 6.

Example 2.19. 12 airplane crashes last year. Assume the crashes are independent and each is equally likely to occur in any of the 12 months. What's the probability of exactly one crash in each month?

- For each crash, let the outcome be its month.

- Number of outcomes for all 12 crashes = \(12^{12}\)

- Number of outcomes with the 12 crashes in 12 different months = 12!

- Probability = \(12!/(12^{12}) = 0.000054\)

- Random does not mean evenly spaced.
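
The exact value, plus one simulated year to show the clumping. A sketch assuming, as the example does, that each crash's month is independent and uniform over the 12 months; the random seed is arbitrary.

```python
import random
from collections import Counter
from fractions import Fraction
from math import factorial

# Exact: 12! favorable month assignments out of 12**12 equally likely ones.
p_exact = Fraction(factorial(12), 12 ** 12)
print(float(p_exact))  # roughly 5.37e-05

# One simulated year, assuming independent, uniform months:
random.seed(0)
months = Counter(random.randrange(12) for _ in range(12))
print(sorted(months.values(), reverse=True))  # crashes per non-empty month
```

Typically the simulated year shows some months with two or more crashes and several with none.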

2.3.4 Sampling WITHOUT replacement and WITHOUT ordering

We care what objects we pick but not the order.

E.g., drawing a hand of cards.

Terminology: combinations of k objects selected from n. Their number is the binomial coefficient

\begin{equation*} C^n_k = {n \choose k} = \frac{n!}{k! (n-k)!} \end{equation*}

Permutations are the case where order matters.
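
Each unordered k-subset can be written in k! orders, which is where the \(k!\) in the denominator comes from. A Python sketch using `itertools.combinations` for the unordered draws and `math.comb`/`math.perm` for the formulas:

```python
from itertools import combinations
from math import comb, factorial, perm

n, k = 5, 2
subsets = list(combinations(range(n), k))  # unordered, no repeats

# Each unordered k-subset corresponds to k! ordered k-tuples.
print(len(subsets), comb(n, k), perm(n, k) // factorial(k))  # 10 10 10
```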

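Example 2.20. Select 2 from 5 w/o order: \({5\choose 2} = 10\).

Example 2.21. # permutations (distinct orderings) of k black and n-k white balls. This is choosing which k of the n positions hold the black balls, so the answer is \({n\choose k}\).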
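
A brute-force confirmation: generate every ordering of a row of k "B"s and n-k "W"s, and collapse orderings that look identical. The letter encoding is just an illustrative choice.

```python
from itertools import permutations
from math import comb

def distinct_arrangements(n: int, k: int) -> int:
    """Distinct rows of k black ('B') and n-k white ('W') balls."""
    row = "B" * k + "W" * (n - k)
    # permutations() treats equal letters as different, so dedupe with a set.
    return len(set(permutations(row)))

print(distinct_arrangements(5, 2), comb(5, 2))  # 10 10
```

This brute force is exponential in n and is only meant as a check of the formula on small cases.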

Example 2.22. 10 of 50 items are bad. What's the probability that exactly 5 of 10 items selected at random are bad?

- # ways to select 10 items from 50 is \({50\choose 10}\)

- # ways to have exactly 5 bad is (# ways to select 5 good from 40) times (# ways to select 5 bad from 10) = \({40\choose5} {10\choose5}\)

- The probability is the ratio \({40\choose5}{10\choose5} \big/ {50\choose10}\).
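
The same ratio for any number k of bad items, as a Python sketch (`p_exactly_bad` is an illustrative name; the defaults are the numbers of Example 2.22). Summing over k = 0..10 should give 1.

```python
from math import comb

def p_exactly_bad(k: int, total: int = 50, bad: int = 10, drawn: int = 10) -> float:
    """P[exactly k bad items when `drawn` items are picked without replacement]."""
    good = total - bad
    return comb(good, drawn - k) * comb(bad, k) / comb(total, drawn)

print(p_exactly_bad(5))  # roughly 0.0161
```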

Multinomial coefficient: the number of ways to partition n items into sets of sizes \(k_1, k_2, \ldots, k_j\), where \(\sum_i k_i = n\):

\begin{equation*} \frac{n!}{k_1!\, k_2! \cdots k_j!} \end{equation*}
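
A sketch of the multinomial coefficient in Python, assuming the sets are labeled (set 1 has size \(k_1\), and so on). If some equal-size sets are interchangeable, divide by the number of ways to relabel them; for example, 924 labeled splits of 12 students into two groups of 6 become 462 unlabeled ones. `multinomial` is an illustrative helper name.

```python
from math import factorial, prod
from typing import Sequence

def multinomial(n: int, sizes: Sequence[int]) -> int:
    """Ways to split n distinct items into labeled groups of the given sizes."""
    assert sum(sizes) == n, "group sizes must add up to n"
    return factorial(n) // prod(factorial(k) for k in sizes)

print(multinomial(12, [6, 6]))              # 924: two labeled groups of 6
print(multinomial(88, [6, 6, 6, 6, 64]))    # 4 labeled teams of 6, 64 left behind
```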

2.3.5. skip

Reading: 2.4 Conditional probability, page 47-

3 Review questions

- Retransmitting a very noisy bit 2 times: the probability of each bit going bad is 0.4. What is the probability of no error at all in the 2 transmissions?

- 0.16

- 0.4

- 0.36

- 0.48

- 0.8

- Flipping an unfair coin 2 times: the probability of each toss being heads is 0.4. What is the probability of both tosses being tails?

- 0.16

- 0.4

- 0.36

- 0.48

- 0.8

- Flipping a fair coin until we get heads: how many flips does it take until the probability of having seen a head is >= 0.8?

- 1

- 2

- 3

- 4

- 5

- This time, the coin is weighted so that P[H] = 0.6. How many flips does it take until the probability of having seen a head is >= 0.8?

- 1

- 2

- 3

- 4

- 5
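
All four review questions above treat the trials as independent, so probabilities multiply, and P[at least one head in n flips] = \(1-(1-p)^n\). A small sketch for checking answers under that independence assumption; `flips_needed` is an illustrative name, not from the text.

```python
def flips_needed(p_head: float, target: float = 0.8) -> int:
    """Smallest n with P[at least one head in n flips] = 1 - (1-p)^n >= target."""
    n = 1
    while 1 - (1 - p_head) ** n < target:
        n += 1
    return n

p_bad = 0.4
print((1 - p_bad) ** 2)  # P[no error in 2 independent transmissions]
print(flips_needed(0.5), flips_needed(0.6))
```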

4 Review

Follow-on to the meal-choice review question. My friend and I each wish to visit a hospital, chosen from Memorial, AMC, and Samaritan. We might visit different hospitals.

- If we don't care whether we visit the same hospital or not, in how many ways can we do this?

- 1

- 2

- 3

- 6

- 9

- We wish to visit different hospitals, to later write a Poly review. In how many ways can we visit different hospitals, where we care which hospital each of us visits?

- 1

- 2

- 3

- 6

- 9

- Modify the above, so that we care only about the set of hospitals the two of us visit.

- 1

- 2

- 3

- 6

- 9

- We realize that Samaritan and Memorial are both owned by St Peter's, and we want to visit two different hospital chains to write our reviews. In how many ways can we pick hospitals so that we pick different chains?

- 1

- 2

- 3

- 4

- 5

- We each pick between Memorial and AMC with 50% probability, independently. What is the probability that each hospital is picked exactly once (as opposed to one being picked twice and the other not at all)?

- 0

- 1/4

- 1/2

- 3/4

- 1
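
The first three hospital questions map onto the sampling schemes of Section 2.3, and the last onto equally likely outcomes. A brute-force Python sketch, assuming each of us picks exactly one hospital; it leaves out the chain question, whose wording admits more than one count.

```python
from itertools import combinations, permutations, product

hospitals = ["Memorial", "AMC", "Samaritan"]

any_pair = list(product(hospitals, repeat=2))       # (mine, friend's); repeats allowed
different = list(permutations(hospitals, 2))        # ordered, must differ
as_sets = list(combinations(hospitals, 2))          # unordered, must differ
print(len(any_pair), len(different), len(as_sets))  # 9 6 3

# Both pick Memorial or AMC with probability 1/2 each, independently.
two = list(product(["Memorial", "AMC"], repeat=2))
each_once = [pair for pair in two if pair[0] != pair[1]]
print(len(each_once) / len(two))                     # 0.5
```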

5 Chapter 2 ctd

New stuff, pp. 47-66:

- Conditional probability - If you know that event A has occurred, does that change the probability that event B has occurred?

- Independence of events - If no, then A and B are independent.

- Sequential experiments - Find the probability of a sequence of experiments from the probabilities of the separate steps.

- Binomial probabilities - tossing a sequence of unfair coins.

- Multinomial probabilities - tossing a sequence of unfair dice.

- Geometric probabilities - toss a coin until you see the 1st head.

- Sequences of dependent experiments - What you see in step 1 influences what you do in step 2.

2.4 Conditional probability, page 47.

- big topic

- E.g., if it snows today, is it more likely to snow tomorrow? next week? in 6 months?

- E.g., what is the probability of the stock market rising tomorrow given that (it went up today, the deficit went down, an oil pipeline was blown up, ...)?

- What's the probability that a CF bulb is alive after 1000 hours given that I bought it at Walmart?

- definition: \(P[A|B] = \frac{P[A\cap B]}{P[B]}\), for \(P[B] > 0\)
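
On a finite sample space with equally likely outcomes, the definition reduces to counting. A sketch using two fair dice as an illustrative example (not from the text): A = {the sum is 8}, B = {the first die is even}.

```python
from fractions import Fraction
from itertools import product

S = list(product(range(1, 7), repeat=2))  # 36 equally likely outcomes

def prob(event) -> Fraction:
    """P[event] for equally likely outcomes: |event| / |S|."""
    return Fraction(sum(1 for s in S if event(s)), len(S))

def in_A(s) -> bool:
    """A: the two dice sum to 8."""
    return s[0] + s[1] == 8

def in_B(s) -> bool:
    """B: the first die is even."""
    return s[0] % 2 == 0

def in_A_and_B(s) -> bool:
    return in_A(s) and in_B(s)

p_a_given_b = prob(in_A_and_B) / prob(in_B)
print(p_a_given_b, prob(in_A))  # 1/6 5/36
```

Knowing B raises the probability of A from 5/36 to 6/36, so here the condition does change the answer.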

E.g., if DARPA had been allowed to run its Futures Markets Applied to Prediction (FutureMAP), would the future probability of King Zog I being assassinated depend on the amount of money bet on that assassination occurring?

- Is that good or bad?

- Would knowing that the real Zog survived over 55 assassination attempts change the probability of a future assassination?

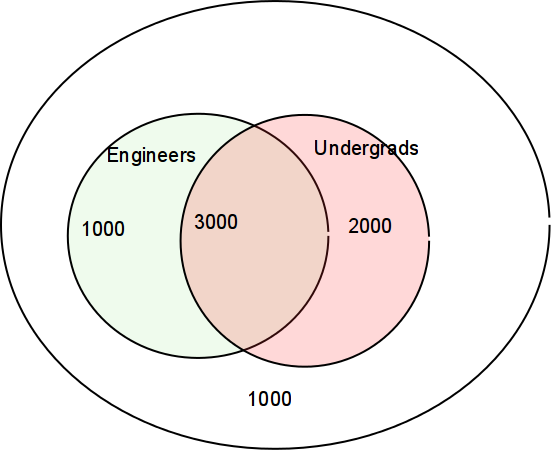

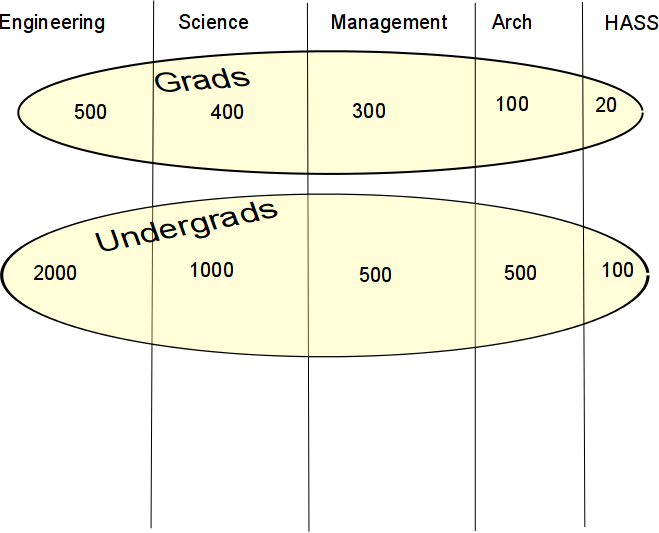

Consider a fictional university that has both undergrads and grads. It also has both Engineers and others:

Review: What's the probability that a student is an Engineer?

- 1/7

- 4/7

- 5/7

- 3/4

- 3/5

Review: What's the probability that a student is an Engineer, given that s/he is an undergrad?

- 1/7

- 4/7

- 5/7

- 3/4

- 3/5

\(P[A\cap B] = P[A|B]P[B] = P[B|A]P[A]\)

Example 2.26 Binary communication. The source transmits 0 with probability (1-p) and 1 with probability p. The receiver errs with probability e. What are the probabilities of the 4 (input, output) events?
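
Assuming an error flips the bit and has the same probability e whichever bit was sent, each of the four (input, output) probabilities is \(P[\text{output}|\text{input}]\,P[\text{input}]\), by \(P[A\cap B] = P[A|B]P[B]\). A sketch with illustrative values p = 0.3 and e = 0.1 (not from the text):

```python
def channel_joint(p: float, e: float) -> dict:
    """P[input = i and output = j] for a binary channel that flips each bit w.p. e."""
    prior = {0: 1 - p, 1: p}
    joint = {}
    for i in (0, 1):
        for j in (0, 1):
            likelihood = e if i != j else 1 - e  # P[output = j | input = i]
            joint[(i, j)] = likelihood * prior[i]
    return joint

for (i, j), pr in channel_joint(p=0.3, e=0.1).items():
    print(f"P[in={i}, out={j}] = {pr:.3f}")
```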

Total probability theorem

- \(B_i\) mutually exclusive events whose union is S

- \(P[A] = P[A\cap B_1] + P[A\cap B_2] + \cdots\)

- \(P[A] = P[A|B_1]P[B_1] + P[A|B_2]P[B_2] + \cdots\)
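
The theorem as a small Python helper, a sketch with the illustrative name `total_probability`. The demo applies it to the binary channel of Example 2.26 with assumed values p = 0.3 and e = 0.1, partitioning on the input bit to get P[output = 1].

```python
def total_probability(cond: list, priors: list) -> float:
    """P[A] = sum_i P[A|B_i] P[B_i] for a partition B_1, ..., B_n of S."""
    assert abs(sum(priors) - 1) < 1e-12, "the B_i must partition S"
    return sum(c * b for c, b in zip(cond, priors))

# Illustration (assumed numbers): P[output = 1] for the binary channel with
# p = P[input = 1] = 0.3 and error probability e = 0.1.
p, e = 0.3, 0.1
print(total_probability([e, 1 - e], [1 - p, p]))  # P[1|0]P[0] + P[1|1]P[1]
```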

What's the probability that a student is an undergrad, given ... (Numbers are fictitious.)

Example 2.28. Chip quality control.

- Each chip is either good or bad.

- P[good]=(1-p), P[bad]=p.

- If the chip is good: P[still alive at t] = \(e^{-at}\)

- If the chip is bad: P[still alive at t] = \(e^{-1000at}\)

- What's the probability that a random chip is still alive at t?
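
By the total probability theorem with the partition {good, bad}, P[alive at t] = \((1-p)e^{-at} + p\,e^{-1000at}\). A sketch that evaluates it; p = 0.1 and a = 1/20000 are borrowed from Example 2.30 purely for illustration, and t is in whatever time unit a is expressed per.

```python
from math import exp

def p_alive(t: float, p: float, a: float) -> float:
    """P[chip alive at t] = P[alive|good]P[good] + P[alive|bad]P[bad]."""
    return (1 - p) * exp(-a * t) + p * exp(-1000 * a * t)

for t in (0, 10, 50, 1000):
    print(t, p_alive(t, p=0.1, a=1 / 20000))
```

The bad chips die off quickly, after which the curve is dominated by the slowly decaying good-chip term.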

2.4.1, p52. Bayes' rule. This lets you invert conditional probabilities: from \(P[A|B_j]\) to \(P[B_j|A]\).

\(B_j\) partition S. That means that

- If \(i\ne j\) then \(B_i\cap B_j=\emptyset\) and

- \(\bigcup_i B_i = S\)

- \(P[B_j|A] = \frac{P[B_j\cap A]}{P[A]} = \frac{P[A|B_j]\, P[B_j]}{\sum_k P[A|B_k]\, P[B_k]}\)

- application:

- We have a priori probs \(P[B_j]\)

- Event A occurs. Knowing that A has happened gives us info that changes the probs.

- Compute a posteriori probs \(P[B_j|A]\)
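
The a priori to a posteriori update fits in a few lines. A sketch with an illustrative `bayes` helper; the denominator is the total probability theorem, and the three-way partition in the demo uses made-up numbers just to exercise the formula.

```python
def bayes(likelihoods: list, priors: list) -> list:
    """Posterior P[B_j|A] = P[A|B_j]P[B_j] / sum_k P[A|B_k]P[B_k]."""
    joint = [lk * b for lk, b in zip(likelihoods, priors)]
    p_a = sum(joint)  # total probability theorem
    return [x / p_a for x in joint]

# Hypothetical 3-way partition, just to exercise the formula.
posterior = bayes(likelihoods=[0.2, 0.5, 0.9], priors=[0.5, 0.3, 0.2])
print([round(x, 3) for x in posterior])
```

Although \(B_3\) has the smallest prior here, it ends up with the largest posterior because A is most likely under \(B_3\).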

In the above diagram, what's the probability that an undergrad is an engineer?

Example 2.29 comm channel: If receiver sees 1, which input was more probable? (You hope the answer is 1.)
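
Applying Bayes' rule to the channel of Example 2.26, again assuming a bit flip with the same probability e in both directions, input 1 is the more probable explanation of a received 1 exactly when \(p(1-e) > (1-p)e\). A sketch with illustrative values, showing that a rarely sent 1 can lose:

```python
def posterior_given_output1(p: float, e: float) -> tuple:
    """(P[in=0 | out=1], P[in=1 | out=1]) for a bit-flip channel with error prob e."""
    joint0 = (1 - p) * e  # sent 0, flipped to 1
    joint1 = p * (1 - e)  # sent 1, received correctly
    p_out1 = joint0 + joint1
    return joint0 / p_out1, joint1 / p_out1

print(posterior_given_output1(p=0.5, e=0.1))   # equally likely inputs
print(posterior_given_output1(p=0.05, e=0.1))  # 1s are rare
```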

Example 2.30 chip quality control: For example 2.28, how long do we have to burn in chips so that the survivors have a 99% probability of being good? p=0.1, a=1/20000.
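
Using Bayes' rule with the model of Example 2.28, \(P[\text{good}|\text{alive at } t] = \frac{(1-p)e^{-at}}{(1-p)e^{-at} + p\,e^{-1000at}}\). Setting this to 0.99 and solving gives \(t = \ln\frac{0.99\,p}{0.01\,(1-p)} \big/ (999a)\). A sketch that evaluates the closed form and checks it numerically (function names are illustrative; t is in whatever time unit a is expressed per):

```python
from math import exp, log

def p_good_given_alive(t: float, p: float, a: float) -> float:
    """Bayes: P[good | alive at t] for the two-population chip model."""
    good = (1 - p) * exp(-a * t)
    bad = p * exp(-1000 * a * t)
    return good / (good + bad)

def burn_in_time(p: float, a: float, target: float = 0.99) -> float:
    """Smallest t with P[good | alive at t] >= target (closed form)."""
    # target = 1 / (1 + (p/(1-p)) e^{-999at}), solved for t
    return log(p * target / ((1 - p) * (1 - target))) / (999 * a)

t = burn_in_time(p=0.1, a=1 / 20000)
print(t, p_good_given_alive(t, p=0.1, a=1 / 20000))  # t is about 48
```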

Example: False positives in a medical test

- T = test for disease was positive; T' = test was negative

- D = you have the disease; D' = you don't have it

- P[T|D] = .99, P[T' | D'] = .95, P[D] = 0.001

- P[D' | T] (the probability that a positive result is a false positive) = 0.98 !!!
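
The 0.98 follows from Bayes' rule with the partition {D, D'}: \(P[T|D'] = 1 - P[T'|D'] = 0.05\), \(P[T] = 0.99\cdot 0.001 + 0.05\cdot 0.999 = 0.05094\), so \(P[D'|T] = 0.04995/0.05094 \approx 0.98\). A Python sketch of the same computation; the parameter names `sens`, `spec`, `prev` are illustrative shorthand for \(P[T|D]\), \(P[T'|D']\), \(P[D]\).

```python
def posterior_no_disease_given_positive(sens: float, spec: float, prev: float) -> float:
    """P[D' | T] via Bayes' rule."""
    p_t_given_d = sens          # P[T | D]
    p_t_given_not_d = 1 - spec  # P[T | D'] = 1 - P[T' | D']
    p_t = p_t_given_d * prev + p_t_given_not_d * (1 - prev)  # total probability
    return p_t_given_not_d * (1 - prev) / p_t

print(posterior_no_disease_given_positive(sens=0.99, spec=0.95, prev=0.001))
```

Raising `prev` to 0.5 in the call drops the result to about 0.048, which shows how much the low prior P[D] drives the surprising answer.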